Graphical Glitch Detection in Video Games Using Convolutional Neural Networks


As part of his master's thesis at KTH Royal Institute of Technology and a six-month internship with SEED, Carlos Garcia Ling explored automated glitch detection for video games. You can download the thesis here.

The Premise

Detecting visual glitches in games is a challenging process. It requires perseverance and a well-trained eye, and even attentive video game testers will catch some glitches while missing others. Some glitches are obvious. Others are subtle and may appear for as little as a single frame. Finding visual glitches during production is vital so they can be fixed before release.

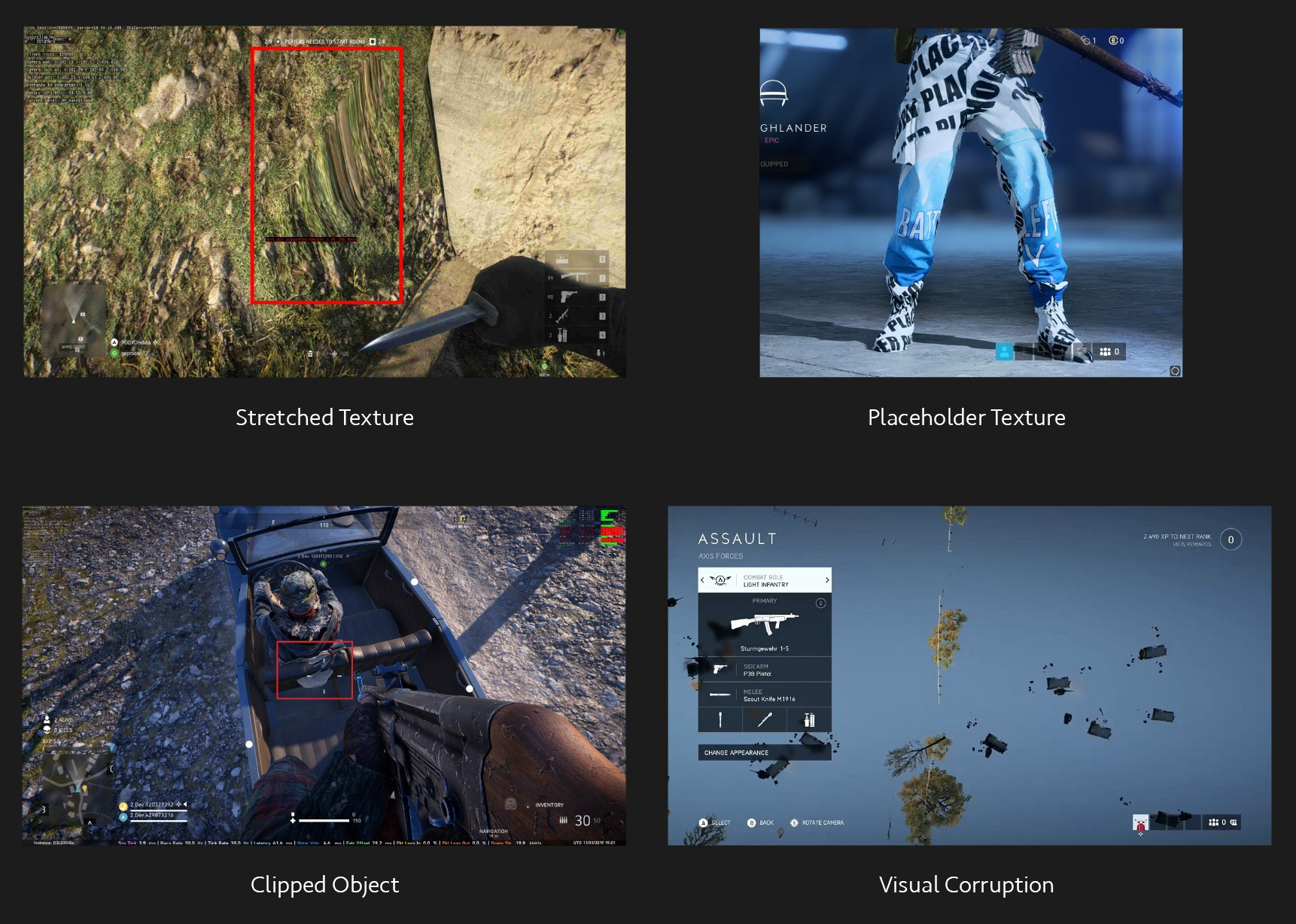

Examples of visual glitches found in video games.

Visual glitches undeniably affect the overall quality of a game and can detract from an otherwise great experience. A system that automatically identifies and locates them will not fix the glitches, but it can greatly reduce the turnaround time for finding and reporting visual bugs.

How It Works

Glitch detection uses a Machine Learning (ML) technique called Deep Convolutional Neural Networks (CNNs) to detect glitches automatically during the testing phase of video game development.

The aim is to identify glitches automatically, without human interaction.
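The basic building block of a CNN is the convolution layer. A small kernel slides across the image and computes a weighted sum at each position, which produces a feature map.

The following is a minimal plain-Python sketch of one such layer (stride 1, no padding) followed by a ReLU activation. The kernel is hand-picked for illustration. In a real network the kernel weights are learned, many layers are stacked, and a deep learning framework performs the computation.

```python
from typing import List

Matrix = List[List[float]]


def conv2d(image: Matrix, kernel: Matrix) -> Matrix:
    """Stride-1, no-padding 2D cross-correlation (no kernel flip), as in common CNN libraries."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out: Matrix = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Weighted sum of the kh x kw window whose top-left corner is (i, j)
            s = 0.0
            for di in range(kh):
                for dj in range(kw):
                    s += image[i + di][j + dj] * kernel[di][dj]
            row.append(s)
        out.append(row)
    return out


def relu(x: Matrix) -> Matrix:
    """Element-wise ReLU: negative responses are clipped to zero."""
    return [[max(0.0, v) for v in row] for row in x]


# A 4x4 image that is dark on the left and bright on the right
image = [[0.0, 0.0, 1.0, 1.0] for _ in range(4)]
edge_kernel = [[-1.0, 1.0]]  # hand-picked: responds to a left-to-right brightness increase
feature_map = relu(conv2d(image, edge_kernel))
print(feature_map)  # [[0.0, 1.0, 0.0], [0.0, 1.0, 0.0], [0.0, 1.0, 0.0], [0.0, 1.0, 0.0]]
```

The feature map is non-zero only in the column where the image changes from dark to bright. Stacking many learned kernels of this kind lets a network respond to more complex texture patterns.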

The key requirements for this kind of ML algorithm are that it avoids producing a large number of false positives and that it is computationally lightweight enough to run during testing.
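One way to make the false-positive requirement concrete is to measure it on labeled test frames.

The sketch below assumes, for illustration only, that the detector outputs one glitch score in [0, 1] per frame. It counts outcomes at a chosen threshold. The function names and scores are hypothetical.

```python
from typing import List, Tuple


def confusion_counts(scores: List[float], labels: List[bool],
                     threshold: float) -> Tuple[int, int, int, int]:
    """Return (tp, fp, tn, fn) when every frame with score >= threshold is flagged."""
    tp = fp = tn = fn = 0
    for score, is_glitch in zip(scores, labels):
        flagged = score >= threshold
        if flagged and is_glitch:
            tp += 1
        elif flagged:
            fp += 1  # clean frame wrongly flagged as glitched
        elif is_glitch:
            fn += 1  # glitch missed
        else:
            tn += 1
    return tp, fp, tn, fn


def false_positive_rate(fp: int, tn: int) -> float:
    """Fraction of clean frames that were wrongly flagged."""
    return fp / (fp + tn) if fp + tn else 0.0


# Hypothetical detector scores for six frames; the first three truly contain a glitch.
scores = [0.9, 0.8, 0.3, 0.6, 0.1, 0.7]
labels = [True, True, True, False, False, False]

for threshold in (0.5, 0.75):
    tp, fp, tn, fn = confusion_counts(scores, labels, threshold)
    print(threshold, (tp, fp, tn, fn), round(false_positive_rate(fp, tn), 3))
# prints:
# 0.5 (2, 2, 1, 1) 0.667
# 0.75 (2, 0, 3, 1) 0.0
```

Raising the threshold removes the false positives here but still misses one glitch. This trade-off has to be tuned for a detector that testers will trust.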

Although video games suffer from many kinds of glitches, this thesis focuses on texture-related ones: missing textures, placeholder textures, low-resolution textures, and so on. These are common and relatively easy to address.
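Because these glitches live at the texture level, labeled training examples can in principle be produced by corrupting clean frames. The sketch below is a hypothetical data-generation step, not the pipeline used in the thesis. It makes the following assumptions:

- An image is represented as rows of RGB tuples.
- A flat purple/magenta fill mimics a missing texture, echoing the purple example shown later.
- Repeating pixels in blocks mimics a low-resolution texture.

Each function also returns a per-pixel mask that could serve as a segmentation label. All names and colors are illustrative.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]
Image = List[List[Pixel]]
Mask = List[List[int]]
Box = Tuple[int, int, int, int]  # (top, left, bottom, right), bottom/right exclusive

MISSING_TEXTURE_COLOR: Pixel = (255, 0, 255)  # hypothetical "missing texture" purple


def _copy(img: Image) -> Image:
    return [list(row) for row in img]


def _mask_for(img: Image, box: Box) -> Mask:
    """1 inside the box (glitched region), 0 elsewhere."""
    top, left, bottom, right = box
    return [[1 if top <= y < bottom and left <= x < right else 0
             for x in range(len(img[0]))] for y in range(len(img))]


def inject_missing_texture(img: Image, box: Box) -> Tuple[Image, Mask]:
    """Paint the box a flat color; the input image is left unchanged."""
    out = _copy(img)
    top, left, bottom, right = box
    for y in range(top, bottom):
        for x in range(left, right):
            out[y][x] = MISSING_TEXTURE_COLOR
    return out, _mask_for(img, box)


def inject_low_resolution(img: Image, box: Box, factor: int) -> Tuple[Image, Mask]:
    """Inside the box, copy the top-left pixel of each factor x factor block (nearest neighbor)."""
    out = _copy(img)
    top, left, bottom, right = box
    for y in range(top, bottom):
        for x in range(left, right):
            src_y = top + (y - top) // factor * factor
            src_x = left + (x - left) // factor * factor
            out[y][x] = img[src_y][src_x]
    return out, _mask_for(img, box)


image = [[(y * 10, x * 10, 0) for x in range(4)] for y in range(4)]
glitched, mask = inject_missing_texture(image, (1, 1, 3, 3))
print(glitched[1][1], sum(map(sum, mask)))  # (255, 0, 255) 4
```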

Methods Used

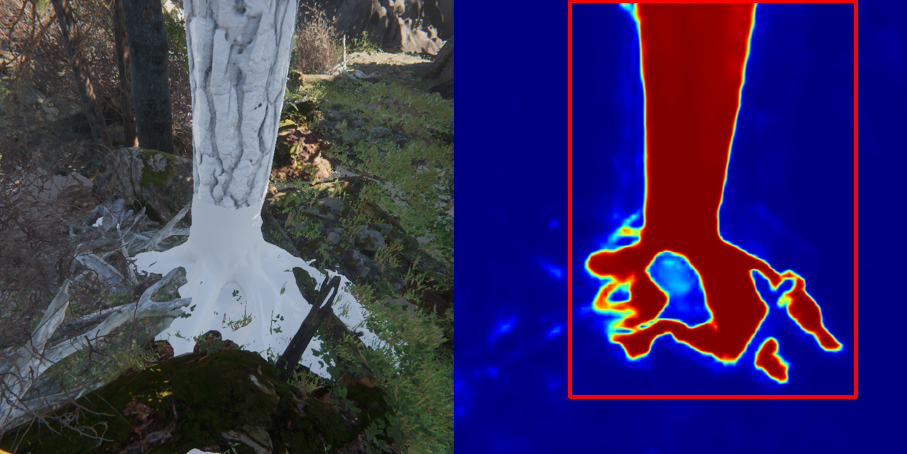

The detector described in Ling's thesis uses Deep Convolutional Neural Networks because of their success in other computer vision tasks. The desired result is an indicator that flags whether a glitch is present, together with localization information that tells us where in the image the glitch is found.
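If a model outputs a per-pixel anomaly probability map, that map can be reduced to the desired result: a flag plus a location.

The sketch below assumes that output format (like the heatmaps in the figure further down). The two thresholds and the `GlitchReport` type are hypothetical choices, not values from the thesis. The `min_fraction` check ignores a handful of scattered hot pixels, which is one simple way to limit false positives.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

Heatmap = List[List[float]]  # heatmap[y][x] = probability that pixel (x, y) is anomalous
Box = Tuple[int, int, int, int]  # (top, left, bottom, right), bottom/right exclusive


@dataclass
class GlitchReport:
    has_glitch: bool
    box: Optional[Box]  # None when no glitch is flagged
    anomalous_fraction: float


def summarize_heatmap(heatmap: Heatmap, pixel_threshold: float = 0.5,
                      min_fraction: float = 0.01) -> GlitchReport:
    """Turn per-pixel anomaly probabilities into a glitch flag plus a bounding box."""
    hot = [(y, x) for y, row in enumerate(heatmap)
           for x, p in enumerate(row) if p >= pixel_threshold]
    total = sum(len(row) for row in heatmap)
    fraction = len(hot) / total if total else 0.0
    # Too few anomalous pixels: treat as noise rather than a glitch.
    if not hot or fraction < min_fraction:
        return GlitchReport(False, None, fraction)
    ys = [y for y, _ in hot]
    xs = [x for _, x in hot]
    return GlitchReport(True, (min(ys), min(xs), max(ys) + 1, max(xs) + 1), fraction)


heatmap = [[0.0] * 4 for _ in range(4)]
heatmap[1][2] = 0.9
heatmap[2][2] = 0.8
print(summarize_heatmap(heatmap))
# GlitchReport(has_glitch=True, box=(1, 2, 3, 3), anomalous_fraction=0.125)
```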

The thesis explores three approaches:

- Object Classification: Determine whether the image contains an object from a set of known classes.

- Object Detection: Identify the approximate location of each object in the image, typically as a bounding box.

- Semantic Segmentation: Identify the shape of the objects in the image by labeling each individual pixel with the object it belongs to.

The Object Classification approach works well but provides little information about where the anomaly is located. Semantic Segmentation, on the other hand, provides detailed information about the position of the glitch. The Object Detection approach also shows promise, since it would provide an adequate level of localization information.
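The difference in localization detail can be illustrated by reducing a semantic segmentation output to the coarser outputs of the other two approaches. This sketch is an illustration, not code from the thesis.

It makes two assumptions:

- A segmentation result is a grid of per-pixel class ids.
- Class 0 is assumed to mean "no glitch".

Under these assumptions:

- The set of classes present corresponds to what a classifier reports.
- Per-class bounding boxes approximate what a detector reports.

Unlike a real detector, this sketch merges all regions of one class into a single box.

```python
from typing import Dict, List, Set, Tuple

ClassMap = List[List[int]]  # per-pixel class id; 0 is assumed to mean "no glitch"
Box = Tuple[int, int, int, int]  # (top, left, bottom, right), bottom/right exclusive


def image_level_classes(class_map: ClassMap) -> Set[int]:
    """Classification-style output: which glitch classes appear anywhere in the image."""
    return {c for row in class_map for c in row if c != 0}


def boxes_per_class(class_map: ClassMap) -> Dict[int, Box]:
    """Detection-style output: one bounding box enclosing all pixels of each class."""
    boxes: Dict[int, Box] = {}
    for y, row in enumerate(class_map):
        for x, c in enumerate(row):
            if c == 0:
                continue
            if c not in boxes:
                boxes[c] = (y, x, y + 1, x + 1)
            else:
                t, l, b, r = boxes[c]
                boxes[c] = (min(t, y), min(l, x), max(b, y + 1), max(r, x + 1))
    return boxes


class_map = [
    [0, 0, 0, 0],
    [0, 4, 4, 0],
    [0, 0, 0, 3],
    [1, 0, 0, 3],
]
print(sorted(image_level_classes(class_map)))  # [1, 3, 4]
print(boxes_per_class(class_map))  # {4: (1, 1, 2, 3), 3: (2, 3, 4, 4), 1: (3, 0, 4, 1)}
```

Each step discards information. The class map itself shows exactly which pixels are affected, the boxes only show roughly where, and the class set only says that something is wrong.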

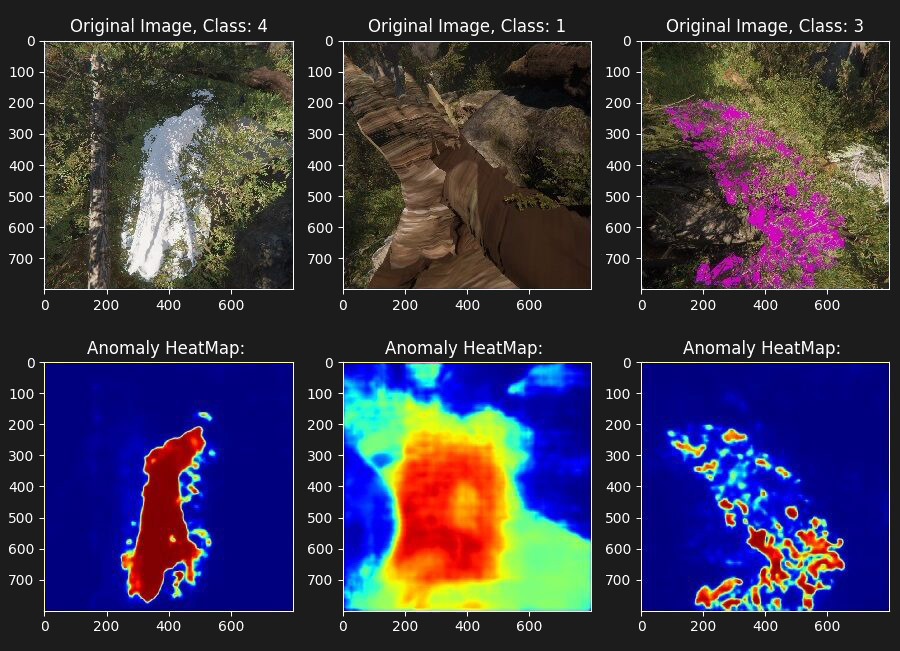

Semantic Segmentation. Original images (Top). Heatmaps showing the probability that each pixel is anomalous, where red indicates a higher probability of an anomaly (Bottom).

The example above shows three different kinds of glitches:

- Class 4: Placeholder texture (white area)

- Class 1: Stretched texture

- Class 3: Missing texture (purple color)
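A semantic segmentation network typically outputs one score per class at every pixel. The following assumptions apply:

- Class 0 means "no glitch". This is an assumption; the example above names only classes 1, 3 and 4, so other ids fall back to a generic name.
- The logits are made up.

Under these assumptions, a heatmap like the ones in the figure could be derived in two steps. First, apply a per-pixel softmax. Then take one minus the probability of the normal class. The most likely class at each pixel gives labels like those listed above.

```python
import math
from typing import Dict, List

# Names for the classes in the example above; 0 = "no glitch" is an assumption.
CLASS_NAMES: Dict[int, str] = {
    0: "no glitch",
    1: "stretched texture",
    3: "missing texture",
    4: "placeholder texture",
}

Logits = List[List[List[float]]]  # logits[y][x][class_id]


def softmax(scores: List[float]) -> List[float]:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def anomaly_heatmap(logits: Logits, normal_class: int = 0) -> List[List[float]]:
    """Per-pixel probability of being anomalous: 1 - P(normal class)."""
    return [[1.0 - softmax(pixel)[normal_class] for pixel in row] for row in logits]


def predicted_classes(logits: Logits) -> List[List[str]]:
    """Name of the most likely class at each pixel (unnamed ids fall back to 'class N')."""
    return [[CLASS_NAMES.get(k, f"class {k}")
             for k in (max(range(len(pixel)), key=pixel.__getitem__) for pixel in row)]
            for row in logits]


# One row, two pixels: the first clearly normal, the second clearly a missing texture.
logits = [[[5.0, 0.0, 0.0, 0.0, 0.0], [0.0, 0.0, 0.0, 6.0, 0.0]]]
print([round(p, 2) for p in anomaly_heatmap(logits)[0]])  # [0.03, 1.0]
print(predicted_classes(logits))  # [['no glitch', 'missing texture']]
```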

For more on how CNNs can be used to detect visual glitches in video games and ultimately improve game testing, explore Ling's thesis here.