SIGGRAPH 2019

SEED

Next week at SIGGRAPH 2019, developers at SEED will give three talks sharing some of our group's latest work and results.

The slides for the presentations will also be posted here on our page shortly after the conference.

State-of-the-Art and Challenges in Game Ray Tracing

(Part of Course: Are We Done with Ray Tracing Yet?)

Speaker: Colin Barré-Brisebois

Location: Room 152

Date: Sunday, July 28th

Time: 09:00 - 12:15

This course will take a look at how far out the future of ray tracing is, review the state of the art, and identify the current challenges for research. Not surprisingly, it looks like we are not done with ray tracing yet.

Course Schedule & Speakers:

- 09:00 - Are we done with Ray Tracing?, Alexander Keller (NVIDIA)

- 09:40 - Acceleration Data Structure Hardware, Timo Viitanen (NVIDIA)

- 10:15 - Game Ray Tracing: State of the Art, Colin Barré-Brisebois (Electronic Arts)

- 11:05 - Reconstruction for Real-Time Path Tracing, Christoph Schied (Facebook Reality Labs)

- 11:40 - From Rasterization to Ray Tracing, Morgan McGuire (NVIDIA)

Texture Level of Detail Strategies for Real-Time Ray Tracing

(Part of Ray Tracing Gems 1.1, presented by NVIDIA)

Speaker: Colin Barré-Brisebois

Location: Room 501 AB

Date: Wednesday, July 31st

Time: 14:00 - 17:15

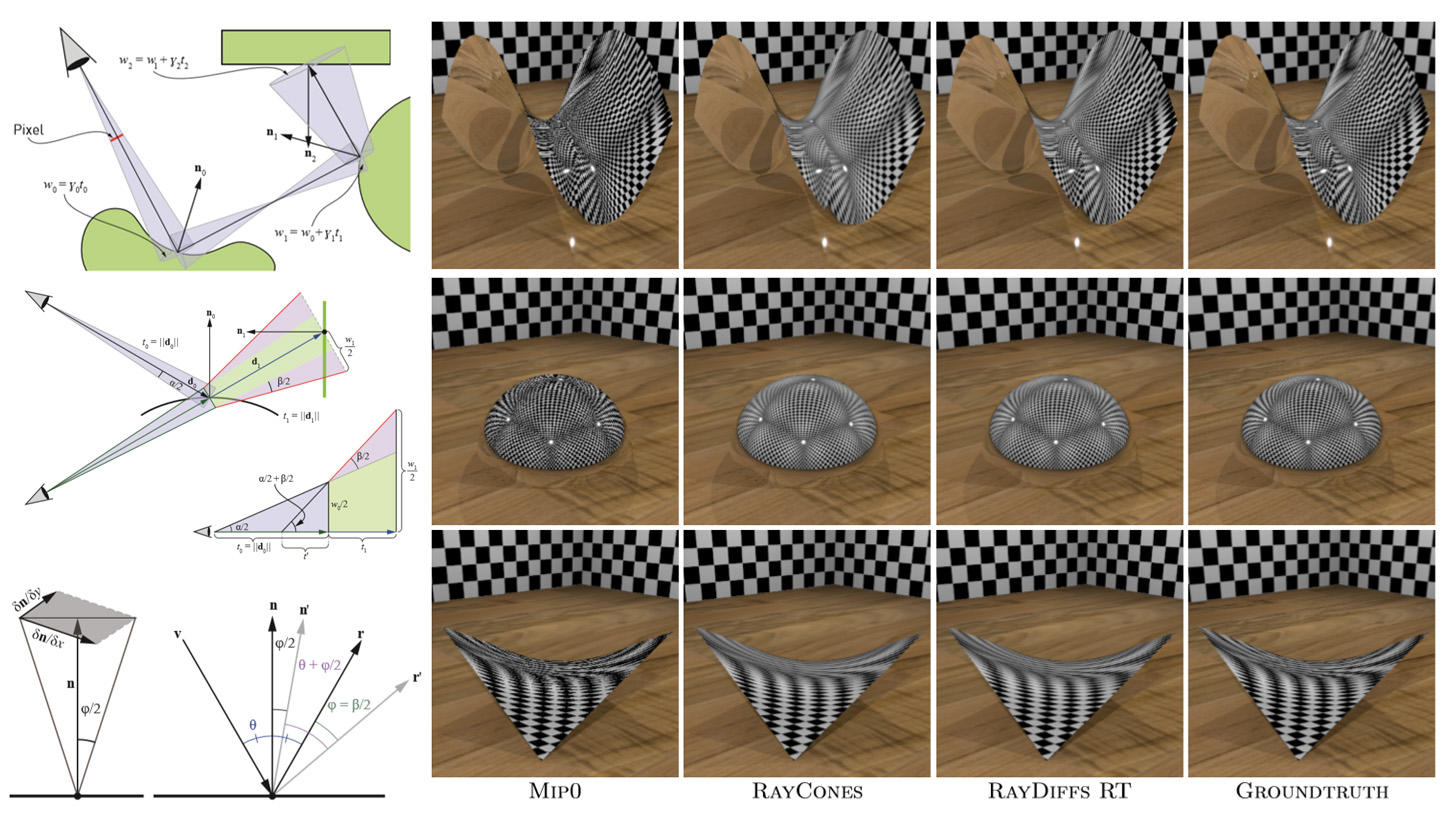

Unlike rasterization, where one can rely on pixel quad partial derivatives, an alternative approach must be taken for filtered texturing during ray tracing. We describe two methods for computing texture level of detail for ray tracing. The first approach uses ray differentials, which is a general solution that gives high-quality results. It is rather expensive in terms of computations and ray storage, however. The second method builds on ray cone tracing and uses a single trilinear lookup, a small amount of ray storage, and fewer computations than ray differentials. We explain how ray differentials can be implemented within DirectX Raytracing (DXR) and how to combine them with a G-buffer pass for primary visibility. We present a new method to compute barycentric differentials. In addition, we give previously unpublished details about ray cones and provide a thorough comparison with bilinearly filtered mip level 0, which we consider as a base method.
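To give a feel for the ray cone idea described above, here is a minimal sketch of how a mip level could be picked from a cone footprint. It is an illustration under stated assumptions rather than the code from the talk or the chapter: the function names (`triangle_lod_constant`, `cone_width_at_hit`, `ray_cone_lod`) are hypothetical, the cone is assumed to start with zero width at the camera, and the cone width at the hit is approximated as spread angle times distance.

```python
import math


def triangle_lod_constant(p0, p1, p2, uv0, uv1, uv2):
    """Per-triangle term: 0.5 * log2(uv-space area / world-space area).

    Both areas are computed as twice the triangle area; the factor cancels.
    """
    e1 = [b - a for a, b in zip(p0, p1)]
    e2 = [b - a for a, b in zip(p0, p2)]
    cx = e1[1] * e2[2] - e1[2] * e2[1]
    cy = e1[2] * e2[0] - e1[0] * e2[2]
    cz = e1[0] * e2[1] - e1[1] * e2[0]
    world_area2 = math.sqrt(cx * cx + cy * cy + cz * cz)
    uv_area2 = abs((uv1[0] - uv0[0]) * (uv2[1] - uv0[1])
                   - (uv2[0] - uv0[0]) * (uv1[1] - uv0[1]))
    return 0.5 * math.log2(uv_area2 / world_area2)


def cone_width_at_hit(spread_angle, distance):
    """Assumed small-angle approximation for a cone of zero initial width."""
    return spread_angle * distance


def ray_cone_lod(tri_const, tex_width, tex_height, cone_width, n_dot_d):
    """Unclamped mip level for a ray cone hitting a textured triangle.

    tri_const  -- value from triangle_lod_constant
    cone_width -- width of the cone at the hit point, in world units
    n_dot_d    -- dot product of surface normal and ray direction
    """
    return (tri_const
            + 0.5 * math.log2(tex_width * tex_height)  # texture resolution
            + math.log2(abs(cone_width))                # footprint size
            - math.log2(abs(n_dot_d)))                  # grazing-angle stretch


# Unit right triangle mapped onto the unit uv triangle, 1024x1024 texture.
tri = triangle_lod_constant((0, 0, 0), (1, 0, 0), (0, 1, 0),
                            (0, 0), (1, 0), (0, 1))
lod_one_texel = ray_cone_lod(tri, 1024, 1024, 1.0 / 1024, -1.0)
lod_four_texels = ray_cone_lod(tri, 1024, 1024, 4.0 / 1024, -1.0)
```

In this setup a footprint one texel wide lands on mip level 0, and a footprint four texels wide lands two levels higher. A real sampler would clamp the result to the available mip range and feed it to a single trilinear lookup.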

You can also read the paper here.

Course Schedule & Speakers:

- 14:00 - Introduction, Eric Haines (NVIDIA)

- 14:05 - A Simple Load-Balancing Scheme with High Scaling Efficiency, Alexander Keller (NVIDIA)

- 14:30 - Cool Patches: A Geometric Approach to Ray/Bilinear Patch Intersections, Alexander Reshetov (NVIDIA)

- 14:50 - A Microfacet-Based Shadowing Function to Solve the Bump Terminator Problem, Clifford Stein (Sony)

- 15:10 - Importance Sampling of Many Lights on the GPU, Pierre Moreau (NVIDIA, Lund University)

- 15:55 - Cinematic Rendering in UE4 with Real-Time Ray Tracing and Denoising, Juan Cañada (Epic Games)

- 16:25 - Texture Level of Detail Strategies for Real-Time Ray Tracing, Colin Barré-Brisebois (Electronic Arts)

- 16:50 - Improving Temporal Antialiasing with Adaptive Ray Tracing, Josef Spjut (NVIDIA)

Direct Delta Mush Skinning and Variants

Speaker: Binh Le

Location: Room 153

Date: Thursday, August 1st

Time: 09:00 - 10:30

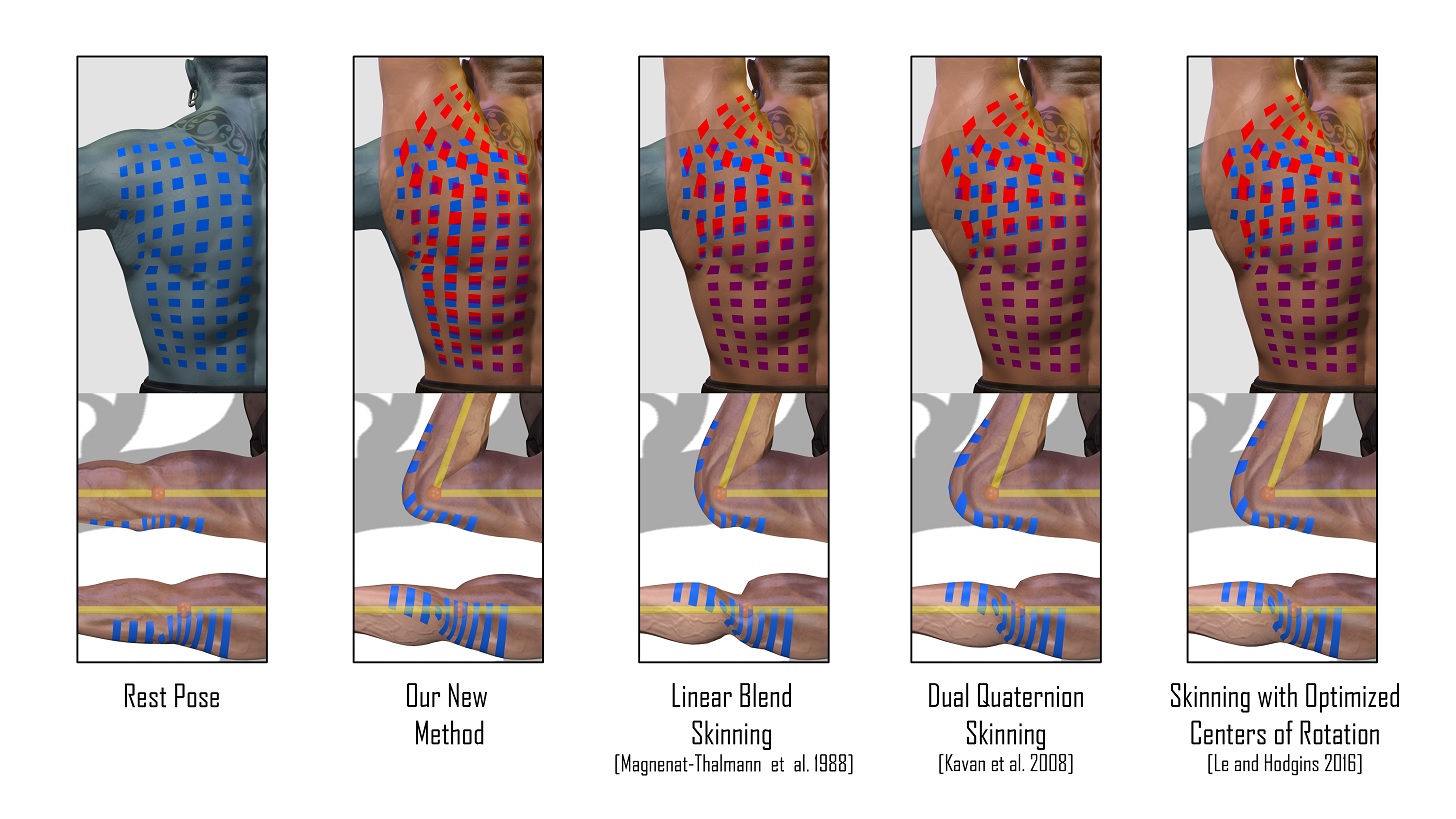

A significant fraction of the world’s population have experienced virtual characters through games and movies, and the possibility of online VR social experiences may greatly extend this audience. At present, the skin deformation for interactive and real-time characters is typically computed using geometric skinning methods. These methods are efficient and simple to implement, but obtaining quality results requires considerable manual “rigging” effort involving trial-and-error weight painting, the addition of virtual helper bones, etc. The recently introduced Delta Mush algorithm largely solves this rig authoring problem, but its iterative computational approach has prevented direct adoption in real-time engines.
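For readers unfamiliar with geometric skinning, the sketch below shows linear blend skinning, one widely used method of this kind. The abstract does not name a specific method, so choosing it here is an assumption, and the helper names (`transform_point`, `linear_blend_skin`) are hypothetical. Each bone matrix is assumed to be a 3x4 affine transform that maps rest-pose positions to posed positions.

```python
def transform_point(matrix, point):
    """Apply a 3x4 affine matrix (three rows of four floats) to a 3D point."""
    return tuple(
        row[0] * point[0] + row[1] * point[1] + row[2] * point[2] + row[3]
        for row in matrix
    )


def linear_blend_skin(rest_position, bone_matrices, weights):
    """Blend the per-bone transformed positions by the painted weights.

    The weights are the values an artist paints during rigging and are
    expected to sum to 1 for each vertex.
    """
    blended = [0.0, 0.0, 0.0]
    for matrix, weight in zip(bone_matrices, weights):
        moved = transform_point(matrix, rest_position)
        for axis in range(3):
            blended[axis] += weight * moved[axis]
    return tuple(blended)


# One bone stays at rest, the other translates by 2 units along x.
identity = [(1.0, 0.0, 0.0, 0.0),
            (0.0, 1.0, 0.0, 0.0),
            (0.0, 0.0, 1.0, 0.0)]
shift_x = [(1.0, 0.0, 0.0, 2.0),
           (0.0, 1.0, 0.0, 0.0),
           (0.0, 0.0, 1.0, 0.0)]
skinned = linear_blend_skin((1.0, 1.0, 1.0), [identity, shift_x], [0.5, 0.5])
```

With equal weights the vertex moves halfway between the two bone results. The quality of the deformation depends entirely on those weights, which is why weight painting takes so much manual effort.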

This paper introduces Direct Delta Mush, a new algorithm that simultaneously improves on the efficiency and control of Delta Mush while generalizing previous algorithms. Specifically, we derive a direct rather than iterative algorithm that has the same ballpark computational form as some previous geometric weight blending algorithms. Straightforward variants of the algorithm are then proposed to further optimize computational and storage cost with insignificant quality losses. These variants are equivalent to special cases of several previous skinning algorithms.

Our algorithm simultaneously satisfies the goals of reasonable efficiency, quality, and ease of authoring. Further, its explicit decomposition of rotational and translational effects allows independent control over bending versus twisting deformation, as well as a skin sliding effect.

You can also read the paper here.