Direct Delta Mush Skinning and Variants

SIGGRAPH 2019

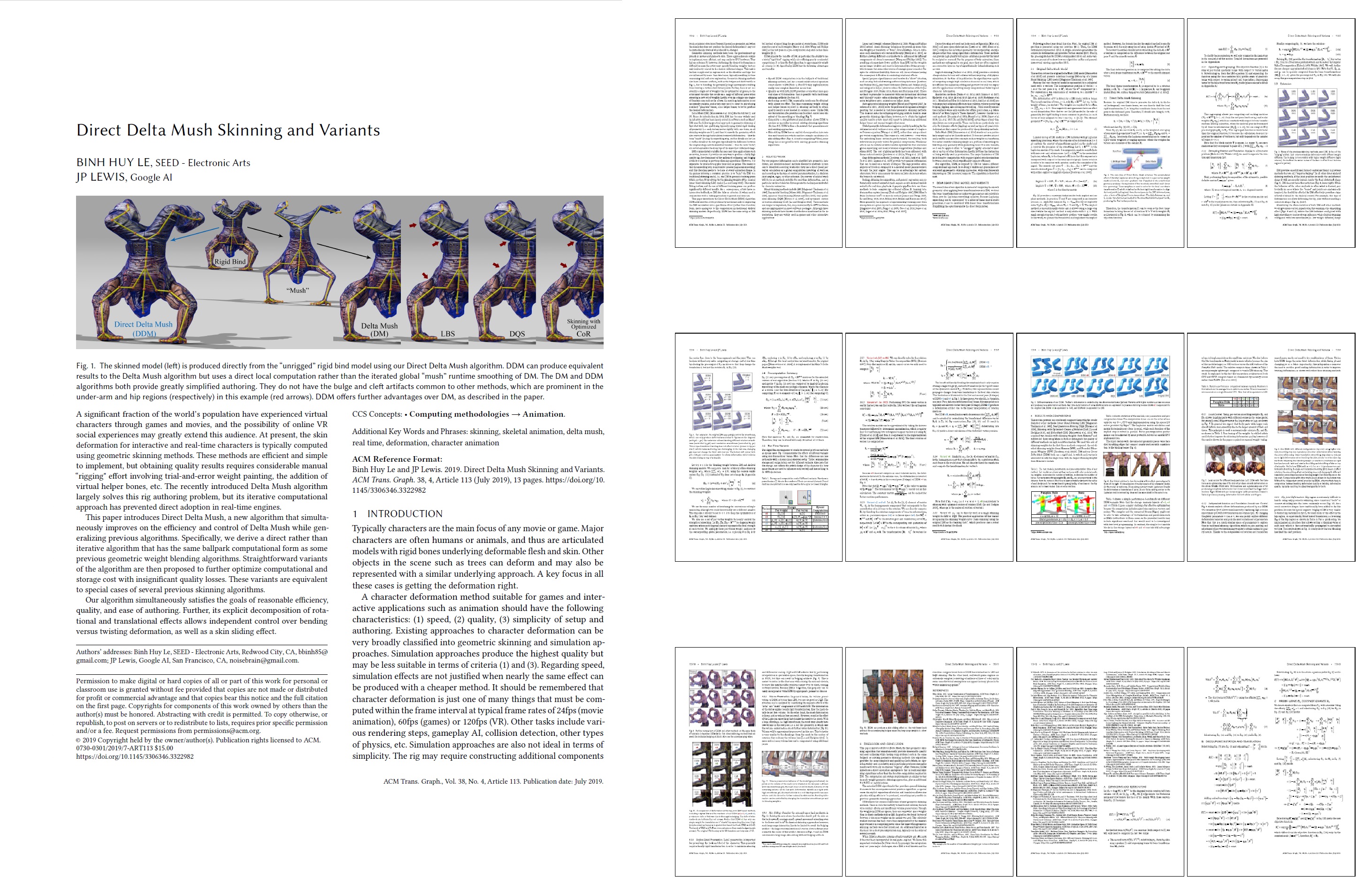

A significant fraction of the world’s population have experienced virtual characters through games and movies, and the possibility of online VR social experiences may greatly extend this audience. At present, the skin deformation for interactive and real-time characters is typically computed using geometric skinning methods. These methods are efficient and simple to implement, but obtaining quality results requires considerable manual “rigging” effort involving trial-and-error weight painting, the addition of virtual helper bones, etc. The recently introduced Delta Mush algorithm largely solves this rig authoring problem, but its iterative computational approach has prevented direct adoption in real-time engines.

This paper introduces Direct Delta Mush, a new algorithm that simultaneously improves on the efficiency and control of Delta Mush while generalizing previous algorithms. Specifically, we derive a direct rather than iterative algorithm that has the same ballpark computational form as some previous geometric weight blending algorithms. Straightforward variants of the algorithm are then proposed to further optimize computational and storage cost with insignificant quality losses. These variants are equivalent to special cases of several previous skinning algorithms.

Our algorithm simultaneously satisfies the goals of reasonable efficiency, quality, and ease of authoring. Further, its explicit decomposition of rotational and translational effects allows independent control over bending versus twisting deformation, as well as a skin sliding effect.
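For readers unfamiliar with the "geometric weight blending" form the abstract refers to, linear blend skinning is the most common example of that family. The sketch below is not Direct Delta Mush and is not taken from the paper; it only illustrates the general computational shape (a per-vertex weighted sum of bone transforms) that the abstract says the direct algorithm resembles. All names (`transform_point`, `linear_blend_skinning`, the sample bones and weights) are hypothetical and chosen for illustration.

```python
from typing import List, Sequence

Vec3 = Sequence[float]
Mat3x4 = Sequence[Sequence[float]]  # three rows of [R | t]


def transform_point(m: Mat3x4, p: Vec3) -> List[float]:
    """Apply a 3x4 affine transform [R | t] to a 3D point."""
    return [m[r][0] * p[0] + m[r][1] * p[1] + m[r][2] * p[2] + m[r][3]
            for r in range(3)]


def linear_blend_skinning(rest_positions: Sequence[Vec3],
                          bone_transforms: Sequence[Mat3x4],
                          weights: Sequence[Sequence[float]]) -> List[List[float]]:
    """Deform each rest-pose vertex as a weighted sum of bone-transformed copies.

    weights[i][j] is the influence of bone j on vertex i; each row is
    assumed to sum to 1.
    """
    deformed = []
    for p, w_row in zip(rest_positions, weights):
        out = [0.0, 0.0, 0.0]
        for m, w in zip(bone_transforms, w_row):
            if w == 0.0:
                continue  # bone has no influence on this vertex
            q = transform_point(m, p)
            for k in range(3):
                out[k] += w * q[k]
        deformed.append(out)
    return deformed


# Illustrative data: one bone stays put, the other translates by +2 along x.
identity = [[1, 0, 0, 0], [0, 1, 0, 0], [0, 0, 1, 0]]
shift_x = [[1, 0, 0, 2], [0, 1, 0, 0], [0, 0, 1, 0]]
rest = [[0, 0, 0], [1, 0, 0]]
w = [[1.0, 0.0], [0.5, 0.5]]  # second vertex is shared equally by both bones

result = linear_blend_skinning(rest, [identity, shift_x], w)
```

In this toy setup the first vertex follows only the stationary bone, while the second is pulled halfway toward the translated bone. The painted weights in `w` are exactly the quantities that the "trial-and-error weight painting" mentioned in the abstract has to tune by hand.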

Binh Huy Le and JP Lewis. 2019. Direct Delta Mush Skinning and Variants. ACM Trans. Graph. 38, 4, Article 113 (July 2019), 13 pages. https://doi.org/10.1145/3306346.3322982

You can download the paper here: PDF (21.5MB)
